Research Question:

What is the potential of applying sonification in museums, and what is its potential when combined with universal design/accessibility design, augmented reality and sound visualization (cymatics)?

The research question was developed from the research and experiments documented in the Research part.

Methodology:

My methodology will be a combination of design by research and research through design, as I will be experimenting with, testing and manipulating different types of data: image data (pixels) and audio data (frequencies, sound). It also draws on data humanism, as I am working with a dataset (paintings, images, sculptures) that I have created from the Museum of Modern Egyptian Art.

Research:

Preliminary Research:

I have been interested in sound visualization via cymatics, especially its potential in 3D when combined with new technologies like augmented reality, as a part of my thesis. Later, I found out about the concept of sonification, which is the process of producing audio/sound from different data. One of the most interesting recent applications is the sonification of space done by NASA, in which various data from nebulae, black holes and galaxies are converted into sound. (Vogel 2020)

Below is a link to the Whirlpool galaxy sonification:

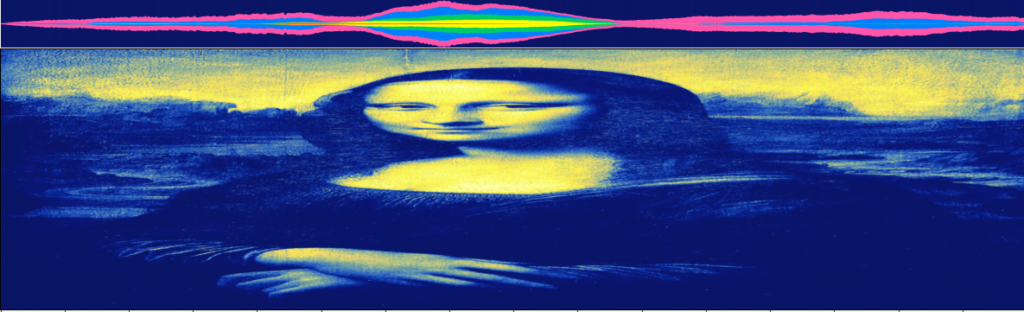

This inspired me to look further into the opportunities sonification might have in the design/art fields. I came across the sonification of images, which is the process of converting data in images (usually the brightness and darkness of the pixels) into sound waves whose frequencies correspond to the brightness of the pixels: lighter pixels produce higher frequencies.

I found the following software that can perform this process:
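The brightness-to-frequency mapping described above can be sketched in a few lines of Python. This is only a minimal illustration and not how any particular software works: the frequency range (220 to 880 Hz), the sample rate, the tone length and the example row of pixels are all assumptions chosen for brevity.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed; kept low for brevity)

def brightness_to_frequency(brightness, f_min=220.0, f_max=880.0):
    """Map a brightness value in 0..255 linearly onto f_min..f_max (Hz)."""
    return f_min + (brightness / 255.0) * (f_max - f_min)

def sonify_pixels(pixels, tone_seconds=0.1):
    """Turn a sequence of brightness values into one short sine tone each."""
    samples = []
    n = int(SAMPLE_RATE * tone_seconds)
    for brightness in pixels:
        freq = brightness_to_frequency(brightness)
        for i in range(n):
            samples.append(math.sin(2 * math.pi * freq * i / SAMPLE_RATE))
    return samples

# A hypothetical row of greyscale pixels, from dark to light.
row = [0, 64, 128, 255]
audio = sonify_pixels(row)
```

Here the darkest pixel produces the lowest tone and the lightest pixel the highest. The resulting list of samples could be written to an audio file in a further step.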

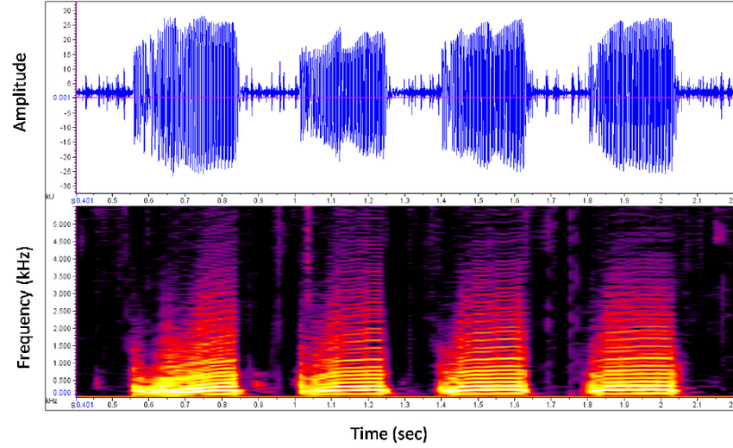

It decodes the noise found in the image based on its pixels and converts the image into a spectrogram. A spectrogram is related to a waveform, which is a graph with time on the X axis and amplitude (or loudness) on the Y axis; a spectrogram instead shows time on the X axis and frequency on the Y axis, with amplitude indicated by brightness or colour. (Tipp 2018)

Figure 1 Screenshot of the Mona Lisa painting in Photosounder and its conversion to audio

Figure 2 Illustration of audio graphs (spectrograms) showing amplitude and frequency
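To make the idea of a spectrogram more concrete, the sketch below computes one from a short test tone using a plain discrete Fourier transform. It is an illustration only: the frame size, hop size, sample rate and the 1000 Hz test tone are assumptions, and dedicated audio libraries do this far more efficiently.

```python
import cmath
import math

SAMPLE_RATE = 8000  # assumed sample rate in Hz
FRAME_SIZE = 64     # assumed number of samples analysed per time step

def spectrogram(samples, frame_size=FRAME_SIZE, hop=32):
    """Return one list of frequency-bin magnitudes per time step.

    Each entry of the result is a time step, each value inside it belongs to
    one frequency bin, and the value itself is the amplitude that a picture
    of the spectrogram would draw as brightness or colour.
    """
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        magnitudes = []
        for k in range(frame_size // 2):  # bins below the Nyquist frequency
            total = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n, x in enumerate(frame)
            )
            magnitudes.append(abs(total))
        frames.append(magnitudes)
    return frames

# A short 1000 Hz sine tone as test input.
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(512)]
spec = spectrogram(tone)

# The loudest bin of the first time step, converted back to Hz.
loudest_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
loudest_hz = loudest_bin * SAMPLE_RATE / FRAME_SIZE
```

Image sonification runs this idea in reverse: the picture is treated as if it were already such a grid of amplitudes, and the sound is synthesized from it.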

Pixelsynth is a browser-based synthesizer that converts the image to greyscale and then uses it to generate sine waves, creating what its creator, Olivia Jack, calls the noise of art. The application reads any white information on the black background as a note-on. The velocity of the note depends on the transparency of the object, and the pitch depends on its vertical location, with the low tones at the base of the picture. Image noise is a random variation of brightness or color information in images, and this noise can be used to generate sound. (Arblaster 2016)
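The mapping described above (vertical position to pitch, brightness to loudness, scanning the image over time) can be sketched as follows. This is not the application's actual code, only a minimal illustration under my own assumptions: the frequency range, the note-on threshold, the time per column and the tiny example image are all hypothetical.

```python
import math

SAMPLE_RATE = 8000  # assumed sample rate in Hz

def row_frequencies(n_rows, f_low=110.0, f_high=1760.0):
    """Give each image row a frequency; row 0 is the top, so it is the highest."""
    if n_rows == 1:
        return [f_low]
    # Geometric spacing, so equal row steps sound like equal pitch steps.
    ratio = (f_high / f_low) ** (1.0 / (n_rows - 1))
    return [f_low * ratio ** (n_rows - 1 - r) for r in range(n_rows)]

def scan_image(image, column_seconds=0.05, threshold=32):
    """Scan a greyscale image (rows of 0..255 values) from left to right."""
    freqs = row_frequencies(len(image))
    n = int(SAMPLE_RATE * column_seconds)
    samples = []
    for col in range(len(image[0])):
        # Pixels brighter than the threshold count as "note on";
        # their brightness sets the loudness of that row's sine wave.
        active = [(freqs[r], image[r][col] / 255.0)
                  for r in range(len(image)) if image[r][col] > threshold]
        for _ in range(n):
            t = len(samples) / SAMPLE_RATE
            value = sum(a * math.sin(2 * math.pi * f * t) for f, a in active)
            samples.append(value / len(image))  # keep the mix within -1..1
    return samples

# A hypothetical 4x3 image: a bright diagonal running from top left downwards.
image = [
    [255,   0,   0],
    [  0, 255,   0],
    [  0,   0, 255],
    [  0,   0,   0],
]
audio = scan_image(image)
```

With this example image the sound would be a short tone that steps downwards in pitch, because the bright pixel moves one row lower in each column.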

Conclusion:

Based on my research, I wanted to experiment with using the previous software and sonification to transform museum paintings into audio, visualize that audio via cymatics, manipulate the painting by creating a video that transforms it, and then reveal the visualization using augmented reality techniques.

Testing out the software:

For the testing part, I chose two completely different images/paintings (different in style, colors, etc.) and decided to convert both into sound using the two programs in order to identify the differences.

Omeka was used as a platform for the image collection and to present its metadata.

SoundCloud was used as a hosting platform for the audio files.

Conclusion from the experiment:

- The output of each program is different, even when using the same image.

- The ratios/dimensions of the images are distorted when imported into both programs, so I don’t think that the output audio is quite accurate.

Figure 5 Screenshot of the Mona Lisa painting in Pixelsynth

Figure 6 Screenshot of the Mona Lisa painting in Photosounder

- The output of the image sonification is more noise/glitching than pleasant music.

- Maybe there is a way to convert the images into piano notes or the notes of another specific musical instrument, so that the result is more interesting to hear.

- Maybe the sound embedded in the images could be a narration or a story that visitors listen to upon scanning the image, and which can then be visualized. This could be a way of storytelling for both groups: people with vision deficiencies can listen to the story of the painting, while people with hearing deficiencies can read the narration and see the sound visualization, so that everyone feels more connected to and involved with the art.

- I came across this experiment as well:

https://artsandculture.google.com/experiment/sgF5ivv105ukhA

Figure 7 Screenshot of Google’s Kandinsky music experiment

In this experiment, paintings are presented online with clickable buttons on certain parts of the image that play music. This inspired me to create paintings with encoded music, where people need to scan certain parts of them and the paintings then come to life through AR videos/image manipulations, sounds and sound visualizations.
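The idea noted above of converting images into piano notes, rather than raw noise, could be sketched by snapping each brightness value to one of the 88 piano keys. This is a minimal sketch under my own assumptions: the linear brightness-to-key mapping is hypothetical, while the MIDI note numbers (21 for A0 up to 108 for C8) and the 440 Hz tuning of A4 are the standard conventions.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def brightness_to_midi(brightness, low=21, high=108):
    """Map brightness 0..255 onto the piano range (MIDI 21 = A0, 108 = C8)."""
    return low + round((brightness / 255.0) * (high - low))

def midi_to_frequency(note):
    """Equal-temperament frequency, with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)

def midi_to_name(note):
    """Human-readable note name such as 'A4'."""
    return f"{NOTE_NAMES[note % 12]}{note // 12 - 1}"

# Hypothetical brightness values taken from an image.
pixels = [0, 128, 255]
notes = [brightness_to_midi(p) for p in pixels]
names = [midi_to_name(n) for n in notes]
frequencies = [midi_to_frequency(n) for n in notes]
```

Restricting the notes further, for example to a single musical scale, would probably make the result sound even less random, but that is a design choice to be tested.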

Data Set Creation

The process

Based on the previous experiments, I decided to create a dataset consisting of images of paintings and sculptures found in the Museum of Modern Egyptian Art. The aim is to use them later to create a “museum within a museum” experience through the sonification of the images, where augmented reality and sound can be used to create a new and accessible experience for all visitors.

I conducted a site visit to the museum and took the photos of the displays (paintings and sculptures) myself, because no archive of the museum displays could be found online. I then used Flourish to create and visualize the dataset. I mainly included art pieces found on the ground floor of the museum.

I chose the cards style in Flourish to make the displays easy to explore. Below is the link to the Flourish visualization:

The data curation was done in both Arabic and English to make it accessible to anyone who wants to reuse it.
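One possible way to structure such a bilingual dataset is sketched below, as one record per art piece that is exported to CSV for upload to a visualization tool. All field names and the image file name are hypothetical and chosen only for illustration; they are not the actual columns of my dataset. Fields without a value are left empty.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Optional

@dataclass
class ArtworkRecord:
    # Hypothetical field names, for illustration only.
    image_file: str
    title_en: Optional[str] = None
    title_ar: Optional[str] = None
    artist_en: Optional[str] = None
    artist_ar: Optional[str] = None
    year: Optional[str] = None
    floor: Optional[str] = None

    def missing_fields(self):
        """List the fields that still have to be curated."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

def to_csv(records):
    """Serialize records to CSV text, writing empty cells for missing values."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ArtworkRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow({k: "" if v is None else v for k, v in asdict(record).items()})
    return buffer.getvalue()

# Example record; the file name is a placeholder.
record = ArtworkRecord(
    image_file="rural_girl.jpg",
    title_en="A rural Girl",
    artist_en="Yousef Kamel",
)
csv_text = to_csv([record])
to_curate = record.missing_fields()
```

Keeping the Arabic and English values in separate columns means either language can be shown on the cards without changing the data.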

Notes from the site visit & the data collection:

- Not all displays in the museum are properly curated; many are missing a lot of data, including the name of the art piece itself, the date of its creation, etc.

- There is no digital archive of the museum displays, so I needed to photograph them myself. This might affect any image manipulation/animation process that depends on the pixels of the images, because the lighting in the museum affects how the displays appear in the photos. Below is an example of a painting that is deeply affected by the light in the museum.

Figure 8 One of Engy Aflaton’s paintings, the Museum of Modern Egyptian Art, Second Floor, and the effect of light on it
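Because any pixel-based sonification would inherit the lighting problem noted above, one option I could test is normalizing the brightness of each photo first. The sketch below shows a simple contrast stretch on a greyscale image. It is only an assumption about what might help: it compensates for a photo that is evenly too dark or too washed out, but not for glare or uneven light across the painting.

```python
def stretch_contrast(image):
    """Rescale greyscale values so the darkest pixel becomes 0 and the lightest 255."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [row[:] for row in image]  # flat image: nothing to stretch
    scale = 255.0 / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in image]

# A hypothetical washed-out photo: all values squeezed between 100 and 150.
photo = [
    [100, 120],
    [140, 150],
]
normalized = stretch_contrast(photo)
```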

Experiments on the dataset:

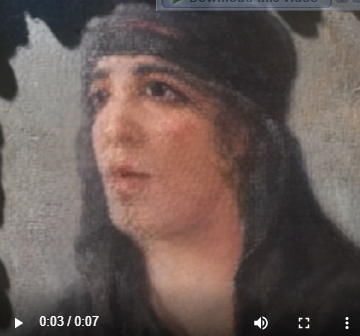

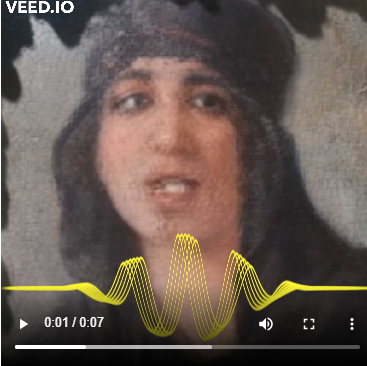

I started by trying to manipulate/animate Yousef Kamel’s “A rural Girl” painting. First, I used “HitPaw”, an online AI face animator, to create an animation of the still image. Then I used “Veed.io”, an online audio visualizer, to add audio visualization to the video. Finally, I used Artivive Bridge to create an AR experience of the painting.

To activate the AR image, download the Artivive mobile app and scan the original image (the “rural girl” image). Note that you need to be connected to the internet for it to work.

Figure 9 Yousef Kamel’s original “A rural Girl” painting

Figure 10 Yousef Kamel’s “A rural Girl” painting after being manipulated using “HitPaw”, an online AI face animator

Figure 12 Yousef Kamel’s “A rural Girl” after using the VEED.IO online audio visualizer

Screen recording of the AR living painting working

Conclusion:

- Turning art into fun and sarcastic digital media opens a new storytelling opportunity, which might appeal to younger generations and encourage them to visit the museum and learn more about the art.

- It’s possible to create a new visual composition out of a still image, a manipulated image/animation, sound and sound visualization.

- The next step is to figure out how to create this at a better quality and to visualize the sound via cymatics.

- Adding the cymatics and some floating 3D animated text could create an accessible/universal experience for people with hearing deficiencies.

- I would like to test the possibility of collaborating with Be My Eyes, a mobile app that allows volunteers to narrate what they see to visually impaired users; maybe a specific narration of the museum could be added to the app.

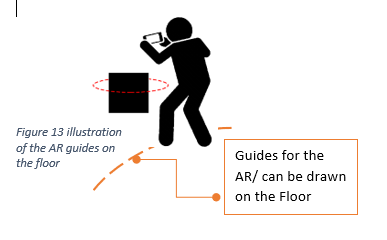

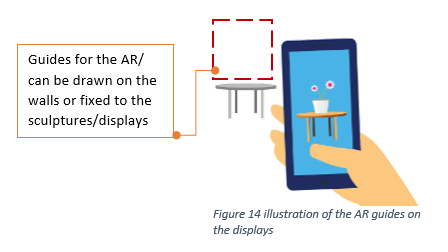

- I am also planning to test adding frame-capture guides or floor-standing position markers to existing museum displays, to guide visitors on how and where to scan the items to explore the AR experience.

Figure 13 illustration of the AR guides on the floor

Figure 14 illustration of the AR guides on the displays
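As a starting point for the cymatics visualization mentioned in the conclusion, the patterns could be approximated digitally with Chladni figures, the nodal-line patterns that appear on a vibrating plate. The sketch below uses a commonly used approximation for a square plate and draws the pattern as text. How the frequencies of a sonified painting would be translated into the mode numbers n and m is not decided yet; the values used here are arbitrary assumptions, and real cymatics in liquids or in 3D would behave differently.

```python
import math

def chladni(x, y, n, m):
    """Approximate standing-wave amplitude on a unit square plate for modes n, m."""
    return (math.cos(n * math.pi * x) * math.cos(m * math.pi * y)
            - math.cos(m * math.pi * x) * math.cos(n * math.pi * y))

def chladni_pattern(n, m, size=21, tolerance=0.1):
    """Mark points where the plate barely moves (the nodal lines) with '#'."""
    rows = []
    for j in range(size):
        y = j / (size - 1)
        row = ""
        for i in range(size):
            x = i / (size - 1)
            row += "#" if abs(chladni(x, y, n, m)) < tolerance else "."
        rows.append(row)
    return rows

# Arbitrary example modes; other (n, m) pairs give other figures.
pattern = chladni_pattern(1, 2)
print("\n".join(pattern))
```

The same function could later be evaluated on a finer grid and rendered as an image or a 3D surface for the AR layer.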

References:

Arblaster, Simon. 2016. “The Noise of Art: Pixelsynth Can Turn Your Images into Music for Free.” MusicRadar. May 26, 2016. https://www.musicradar.com/news/tech/the-noise-of-art-pixelsynth-can-turn-your-images-into-music-for-free-638423.

Classical Music Reimagined. 2016. “Fun with Spectrograms! How to Make an Image Using Sound and Music.” YouTube. May 20, 2016. https://www.youtube.com/watch?v=N2DQFfID6eY.

Feaster, Patrick. 2014. “How to ‘Play Back’ a Picture of a Sound Wave.” Griffonage-Dot-Com. November 27, 2014. https://griffonagedotcom.wordpress.com/2014/11/27/how-to-play-back-a-picture-of-a-sound-wave/.

Tipp, Cheryl. 2018. “Seeing Sound: What Is a Spectrogram? – Sound and Vision Blog.” Blogs.bl.uk. September 19, 2018. https://blogs.bl.uk/sound-and-vision/2018/09/seeing-sound-what-is-a-spectrogram.html.

Vogel, Tracy. 2020. “Explore – from Space to Sound.” NASA. September 29, 2020. https://www.nasa.gov/content/explore-from-space-to-sound.